To learn Spark, I created four virtual machines and installed Spark 3.0.0 on them.

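The underlying Hadoop cluster is already installed; see "Installing a 4-node Hadoop 3.2.1 distributed cluster on OL 7.7 as a learning environment" (ol7.7安装部署4节点hadoop 3.2.1分布式集群学习环境).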

![image](https://img2020.cnblogs.com/blog/1393680/202007/1393680-20200710102254683-1448424533.png)

First, extract the archive:

[hadoop@master ~]$ sudo tar -zxf spark-3.0.0-bin-without-hadoop.tgz -C /usr/local
[hadoop@master ~]$ cd /usr/local
[hadoop@master /usr/local]$ sudo mv ./spark-3.0.0-bin-without-hadoop/ spark
[hadoop@master /usr/local]$ sudo chown -R hadoop: ./spark

Add the environment variables on all four nodes:

export SPARK_HOME=/usr/local/spark
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
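Because these two lines have to be added on every node, it may help to add them in a way that is safe to repeat. The following is a minimal sketch, not the method used in this post: the helper names `append_once` and `add_spark_env` are hypothetical, and the choice of `~/.bashrc` as the profile file is an assumption, since the post does not say which file was edited.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already there,
# so running the script twice does not duplicate the exports.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Add the two Spark exports from this post to the given profile file.
add_spark_env() {
  local profile="$1"
  append_once 'export SPARK_HOME=/usr/local/spark' "$profile"
  append_once 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin' "$profile"
}

# Usage (assumption: the profile file is ~/.bashrc):
#   add_spark_env "$HOME/.bashrc" && source "$HOME/.bashrc"
```

The single quotes keep `$PATH` and `$SPARK_HOME` unexpanded, so the profile file receives the literal text and the variables are resolved at login time.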

Configure Spark

In the Spark directory, run cp ./conf/spark-env.sh.template ./conf/spark-env.sh, then append the following to the end of conf/spark-env.sh:

export SPARK_MASTER_IP=192.168.168.11
export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop
export SPARK_LOCAL_DIRS=/usr/local/hadoop
export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)

Next, configure the worker nodes: run cp ./conf/slaves.template ./conf/slaves and change the file's contents to:

master
slave1
slave2
slave3
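Spark's standalone launch scripts log in over SSH to each host listed in conf/slaves, so it can be worth checking that every entry is reachable without a password prompt before starting the cluster. The sketch below is one possible check; the function names `list_workers` and `check_workers` are hypothetical and not part of Spark.

```shell
#!/usr/bin/env bash
# Print the host names in a slaves file, ignoring comments and blank lines.
list_workers() {
  sed -e 's/#.*$//' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e '/^$/d' "$1"
}

# Try a non-interactive SSH login to every listed host and report the result.
check_workers() {
  local host
  for host in $(list_workers "$1"); do
    # BatchMode makes ssh fail instead of asking for a password.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true < /dev/null; then
      echo "ok: $host"
    else
      echo "FAILED: $host"
    fi
  done
}

# Usage (path taken from this post's layout):
#   check_workers /usr/local/spark/conf/slaves
```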

Hard-code JAVA_HOME by appending the following to the end of sbin/spark-config.sh:

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_191

Copy the Spark directory to the other nodes:

sudo scp -r /usr/local/spark/ slave1:/usr/local/
sudo scp -r /usr/local/spark/ slave2:/usr/local/
sudo scp -r /usr/local/spark/ slave3:/usr/local/
sudo chown -R hadoop ./spark/

...

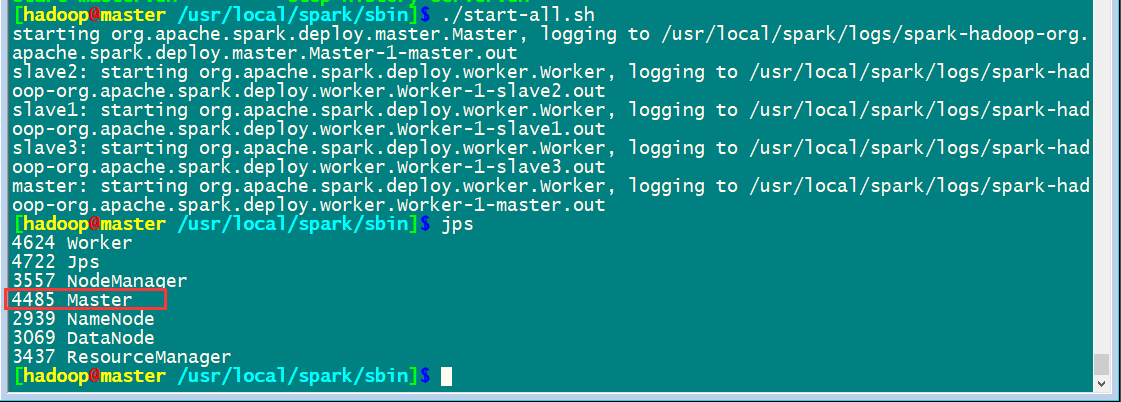

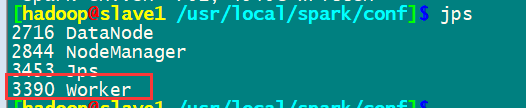
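The copy step above repeats the same command for each worker, so it could also be written as a loop. This is only a sketch under assumptions: the helper names `run` and `distribute_spark` are hypothetical, and fixing ownership remotely with `ssh -t` assumes the login user has sudo rights on each worker, which the post does not state. By default it only prints the commands; set DRY_RUN=0 to execute them.

```shell
#!/usr/bin/env bash
# Print the command (dry run, the default) or execute it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Copy /usr/local/spark to each host, then hand the copy over to user hadoop.
distribute_spark() {
  local host
  for host in "$@"; do
    run sudo scp -r /usr/local/spark/ "$host:/usr/local/"
    # Assumption: ownership is changed on the remote side through ssh + sudo.
    run ssh -t "$host" sudo chown -R hadoop /usr/local/spark
  done
}

# Usage:
#   distribute_spark slave1 slave2 slave3              # show the commands
#   DRY_RUN=0 distribute_spark slave1 slave2 slave3    # actually run them
```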

Start the cluster

First start the Hadoop cluster: /usr/local/hadoop/sbin/start-all.sh

Then start the Spark cluster:

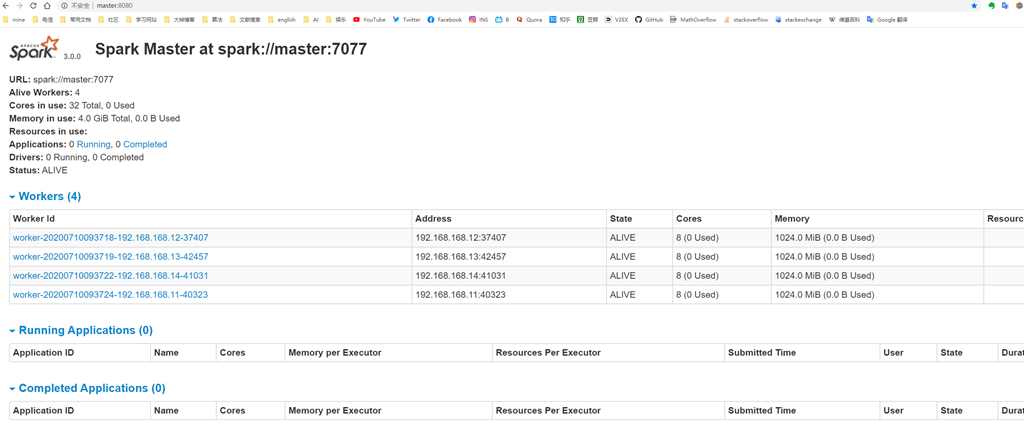
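Hadoop and Spark both ship a script named sbin/start-all.sh, so calling Spark's by its full path (/usr/local/spark/sbin/start-all.sh in this layout) avoids picking up the wrong one from PATH. After starting, `jps` can confirm that the daemons are running. The sketch below assumes the usual `jps` output of one "pid name" pair per line; `missing_daemons` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print every expected daemon name that does not appear in the given jps output.
# jps prints one "<pid> <class name>" pair per line.
missing_daemons() {
  local jps_output="$1" name
  shift
  for name in "$@"; do
    printf '%s\n' "$jps_output" \
      | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
      || printf '%s\n' "$name"
  done
}

# Usage on master after starting the cluster:
#   missing_daemons "$(jps)" Master Worker
```

Since master is also listed in conf/slaves, both a Master and a Worker process are expected there; on slave1 to slave3 only Worker is expected. Empty output means nothing is missing.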

Monitor the cluster through port 8080 on master.
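Besides opening the page in a browser, the web UI can be probed from a shell. A minimal sketch, assuming `curl` is installed and that the host name `master` resolves from where the check runs; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Build the URL of the standalone master's web UI.
master_ui_url() {
  local host="${1:-master}" port="${2:-8080}"
  printf 'http://%s:%s/\n' "$host" "$port"
}

# Succeed only if the web UI answers with HTTP 200 within five seconds.
master_ui_up() {
  local code
  code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$(master_ui_url "$@")")
  [ "$code" = "200" ]
}

# Usage:
#   master_ui_up master 8080 && echo "Spark master UI is reachable"
```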


Installation complete.
